DeepSeek-V2.5: A Brand New Open-Source Model Integrating General and Coding Abilities

Today, we completed the merger of two models, DeepSeek-V2-Chat and DeepSeek-Coder-V2, and officially released the result as DeepSeek-V2.5.

DeepSeek-V2.5 retains the general dialogue capabilities of the original Chat model and the strong code processing capabilities of the Coder model. It is also better aligned with human preferences. In addition, DeepSeek-V2.5 shows significant improvements in areas such as writing and instruction following.

DeepSeek-V2.5 is now fully available on the web and through the API. The API is backward-compatible, and users can access the new model through either the `deepseek-coder` or the `deepseek-chat` model name. Features such as Function Calling, FIM Completion, and JSON Output remain unchanged.
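Because the API is backward-compatible, switching to DeepSeek-V2.5 should only require choosing one of the two model names. The sketch below builds a chat request body for either name and makes no network call. It assumes an OpenAI-style chat completions format. The endpoint URL and the `response_format` field used to enable JSON Output are assumptions, not details from this announcement, so confirm both against the official API documentation. `build_chat_request` is a hypothetical helper.

```python
import json

# Assumed OpenAI-compatible chat endpoint; verify against the official API docs.
API_URL = "https://api.deepseek.com/chat/completions"

# Both model names from the announcement route to DeepSeek-V2.5.
SUPPORTED_MODELS = {"deepseek-chat", "deepseek-coder"}


def build_chat_request(model: str, user_message: str, json_output: bool = False) -> str:
    """Return a JSON request body for a chat completion (hypothetical helper, no network call)."""
    if model not in SUPPORTED_MODELS:
        raise ValueError(f"unknown model name: {model!r}")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    if json_output:
        # Assumed OpenAI-style switch for JSON Output mode.
        body["response_format"] = {"type": "json_object"}
    return json.dumps(body)


if __name__ == "__main__":
    for name in sorted(SUPPORTED_MODELS):
        print(name, build_chat_request(name, "Reply in JSON: what is 2 + 2?", json_output=True))
```

Existing client code that already sends `deepseek-chat` or `deepseek-coder` would need no change under this assumption.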

The all-in-one DeepSeek-V2.5 offers users a simpler, smarter, and more efficient experience.

Upgrade History

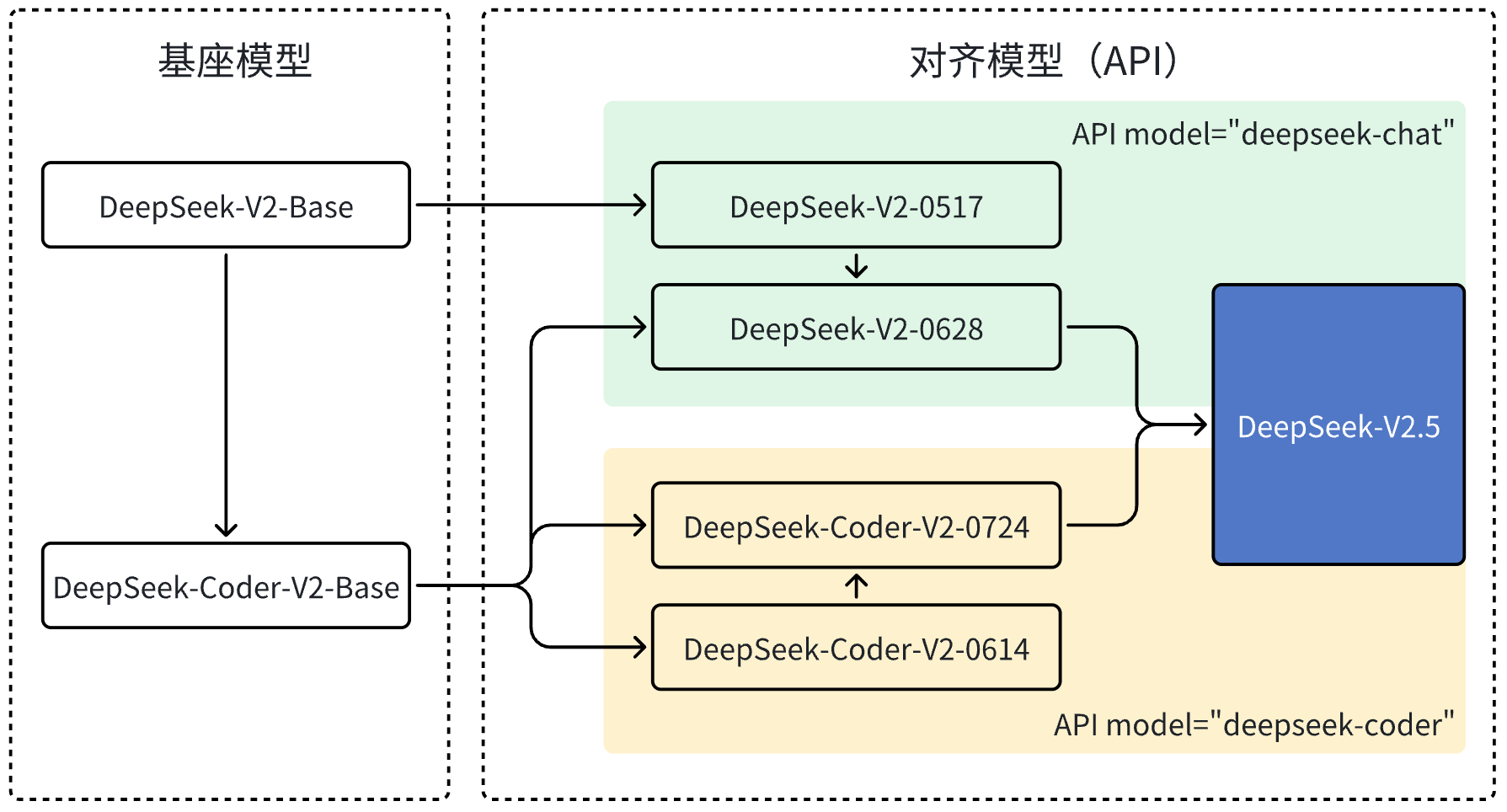

DeepSeek has always focused on improving and optimizing its models. In June, we carried out a major upgrade of DeepSeek-V2-Chat. We replaced the original Chat Base model with the Coder-V2 Base model, which significantly improved its code generation and reasoning capabilities, and released the result as DeepSeek-V2-Chat-0628. Shortly afterward, we kept DeepSeek-Coder-V2 on its original Base model and greatly enhanced its general capabilities through alignment optimization. We released this version as DeepSeek-Coder-V2-0724. Finally, we merged the Chat and Coder models into the new DeepSeek-V2.5.

Because this version differs considerably from its predecessors, performance may degrade in certain scenarios. If that happens, we recommend readjusting the System Prompt and Temperature to get the best results.
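One way to follow this advice is to send otherwise identical requests that differ only in system prompt and temperature, then compare the outputs side by side. The sketch below generates those request variants without sending anything. It assumes the same OpenAI-style chat request shape. The prompt texts and temperature values are arbitrary placeholders, and `request_variants` is a hypothetical helper.

```python
from itertools import product


def request_variants(user_message, system_prompts, temperatures, model="deepseek-chat"):
    """Yield one chat request body per (system prompt, temperature) pair (hypothetical helper)."""
    for system_prompt, temperature in product(system_prompts, temperatures):
        messages = []
        if system_prompt:  # an empty string means "send no system prompt"
            messages.append({"role": "system", "content": system_prompt})
        messages.append({"role": "user", "content": user_message})
        yield {"model": model, "messages": messages, "temperature": temperature}


if __name__ == "__main__":
    # Placeholder prompts and temperatures; tune these for your own scenario.
    prompts = ["", "You are a concise technical assistant."]
    temps = [0.0, 0.7, 1.0]
    for body in request_variants("Summarize RAII in two sentences.", prompts, temps):
        print(len(body["messages"]), body["temperature"])
```

Varying only these two knobs keeps the comparison controlled. Any difference in output can then be attributed to the system prompt or the temperature rather than to the user message.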

General Abilities

- General Ability Evaluation

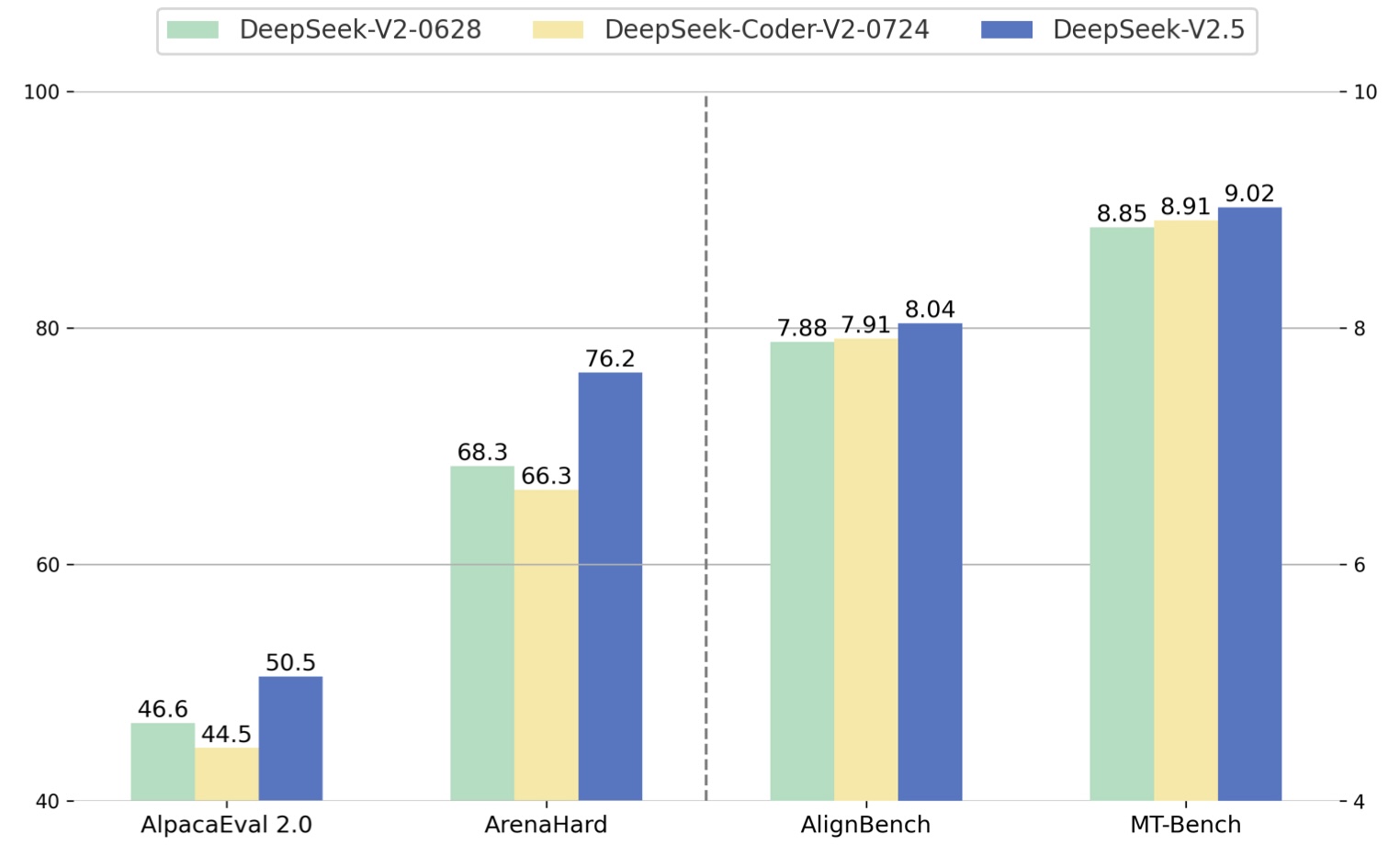

First, we evaluated DeepSeek-V2.5 on industry-standard test sets. On four Chinese and English test sets, DeepSeek-V2.5 outperformed its predecessors, DeepSeek-V2-0628 and DeepSeek-Coder-V2-0724.

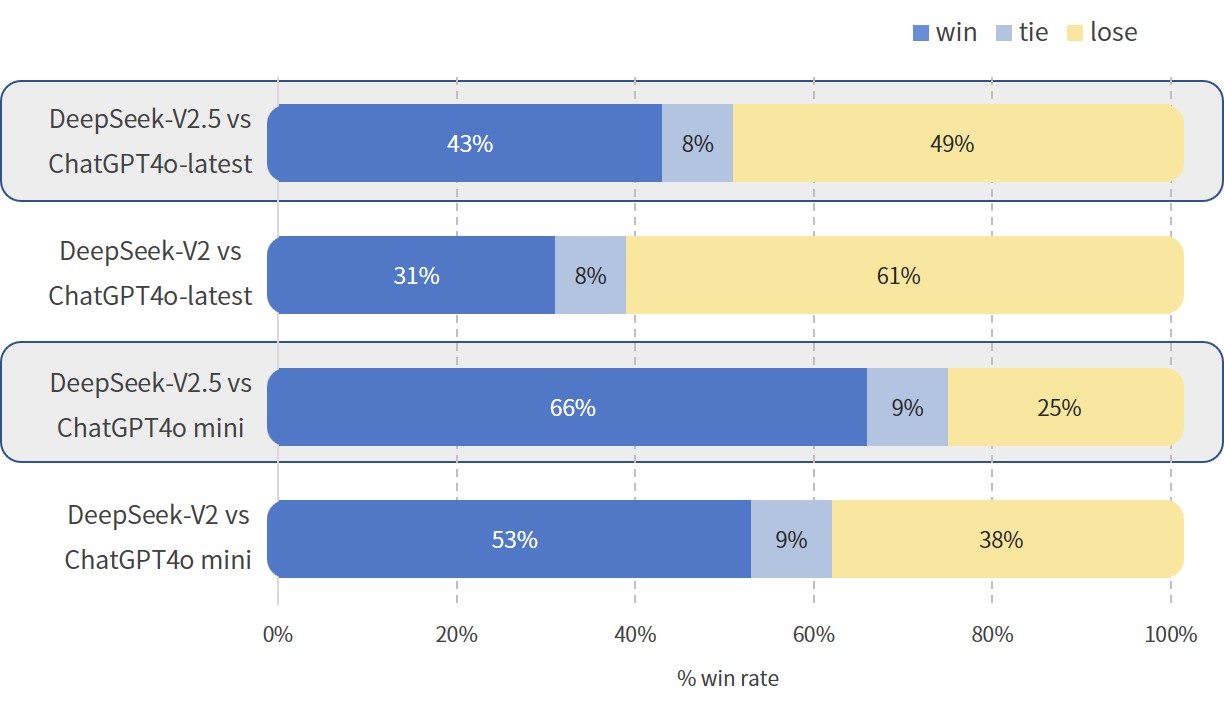

In our internal Chinese evaluation, DeepSeek-V2.5's win rates against GPT-4o mini and ChatGPT-4o-latest (with GPT-4o as the judge) are significantly higher than those of DeepSeek-V2-0628. This evaluation covers general abilities such as creative writing and question answering, so the gains should translate into a better user experience.
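Pairwise win rates like these are usually computed from per-prompt judge verdicts. The sketch below assumes that each comparison yields "win", "tie", or "loss" for DeepSeek-V2.5, and that a tie counts as half a win. That tie rule is one common convention and is not necessarily the one used in the internal evaluation. `win_rate` is a hypothetical helper.

```python
from collections import Counter


def win_rate(verdicts):
    """Share of pairwise comparisons won, counting each tie as half a win (assumed convention)."""
    counts = Counter(verdicts)
    unknown = set(counts) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected verdicts: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no verdicts")
    return (counts["win"] + 0.5 * counts["tie"]) / total


if __name__ == "__main__":
    # Hypothetical judge outputs for five prompts.
    print(win_rate(["win", "win", "tie", "loss", "win"]))  # 0.7
```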

- Safety Ability Evaluation

The trade-off between safety and helpfulness has always been a key focus of our iterative development. In DeepSeek-V2.5, we have drawn clearer boundaries around safety-related issues. We strengthened the model's resistance to various jailbreak attacks. At the same time, we reduced the tendency of its safety policies to overgeneralize to normal questions.

| Model | Comprehensive Safety Score (higher is better)¹ | Safety Spillover Ratio (lower is better)² |
|---|---|---|
| DeepSeek-V2-0628 | 74.4% | 11.3% |
| DeepSeek-V2.5 | 82.6% | 4.6% |

¹ Measured on an internal test set. A higher score indicates stronger overall model safety.

² Measured on an internal test set. A lower ratio means the model's safety policies interfere less with normal questions.
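The exact formulas behind these two internal metrics are not published here. Under one plausible reading:

- The safety score is the share of harmful or jailbreak prompts that the model handles safely.
- The spillover ratio is the share of benign prompts that the model wrongly refuses.

The sketch below computes both metrics under that assumption. It further simplifies "handled safely" to "refused". The `Result` record and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Result:
    # Hypothetical record for one test prompt.
    prompt_is_harmful: bool  # True for attack / unsafe prompts
    model_refused: bool      # True if the model declined to answer


def safety_metrics(results):
    """Return (safety_score, spillover_ratio) under the assumed definitions."""
    harmful = [r for r in results if r.prompt_is_harmful]
    benign = [r for r in results if not r.prompt_is_harmful]
    if not harmful or not benign:
        raise ValueError("need both harmful and benign prompts")
    # Assumed: a harmful prompt counts as handled safely when it is refused.
    safety_score = sum(r.model_refused for r in harmful) / len(harmful)
    # Assumed: spillover is any refusal of a benign prompt.
    spillover_ratio = sum(r.model_refused for r in benign) / len(benign)
    return safety_score, spillover_ratio


if __name__ == "__main__":
    # Hypothetical results: 2 harmful prompts, 4 benign prompts.
    sample = [
        Result(True, True), Result(True, False),
        Result(False, False), Result(False, True),
        Result(False, False), Result(False, False),
    ]
    print(safety_metrics(sample))
```

Read this way, the two columns pull in opposite directions. Refusing more often raises the safety score but also risks raising the spillover ratio, which is why the table reports both.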

Coding Abilities

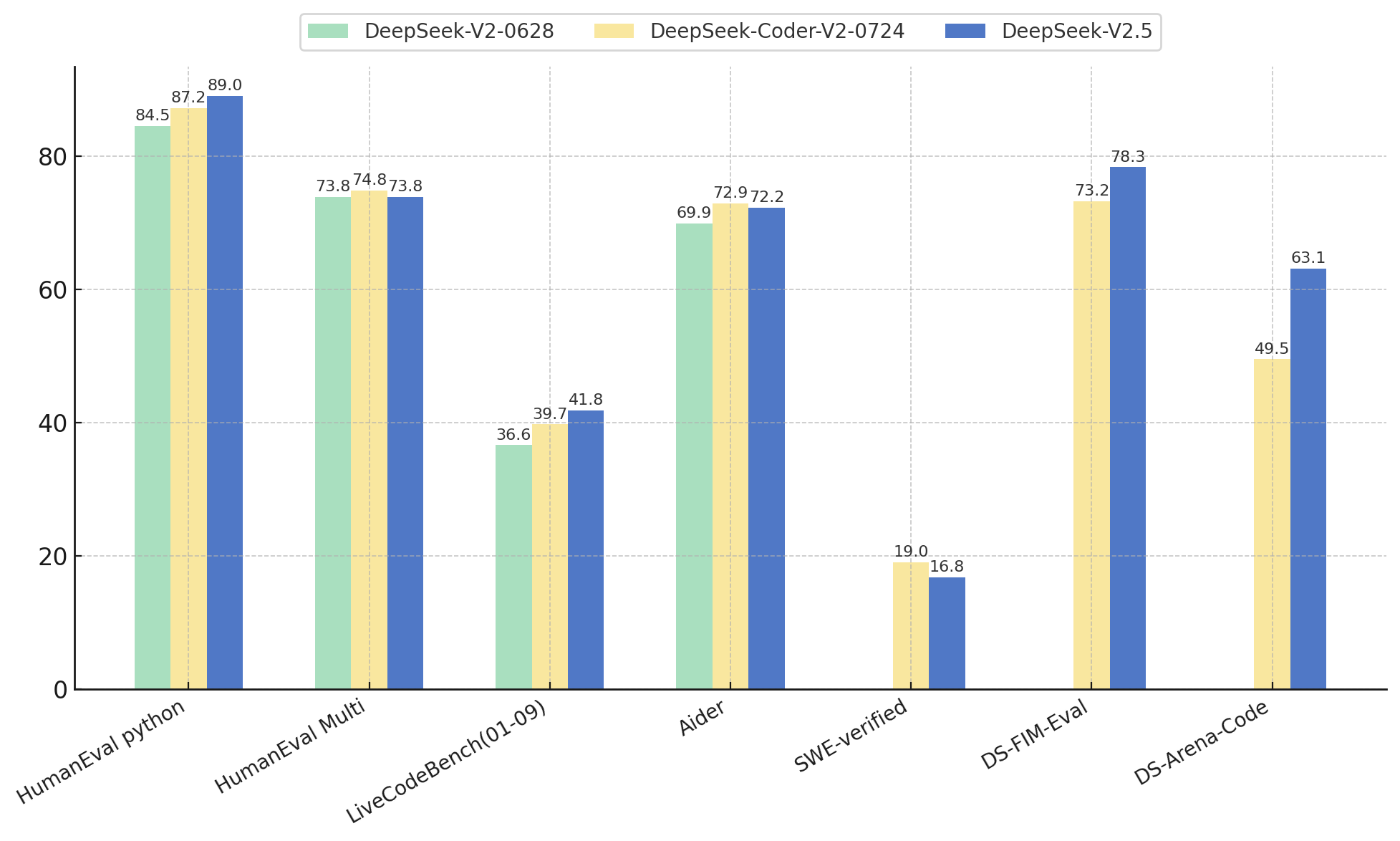

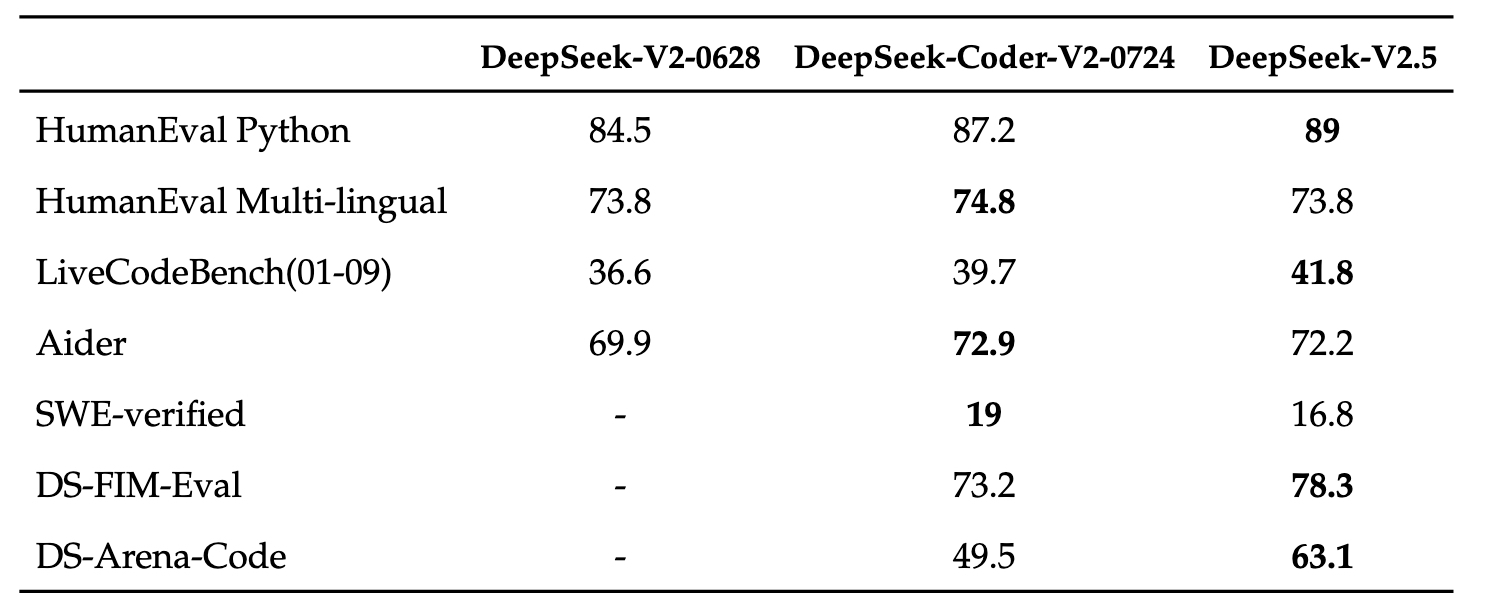

In coding, DeepSeek-V2.5 retains the strong capabilities of DeepSeek-Coder-V2-0724. On HumanEval Python and LiveCodeBench (January 2024 to September 2024), DeepSeek-V2.5 shows notable improvements over DeepSeek-Coder-V2-0724. On HumanEval Multilingual and Aider, DeepSeek-Coder-V2-0724 retains a slight edge. On SWE-bench Verified, both versions score relatively low, which indicates that this area still needs further optimization. For FIM completion, DeepSeek-V2.5's score on the internal DS-FIM-Eval set increased by 5.1%, which should improve code completion in editor plugins.
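In FIM (fill-in-the-middle) completion, the model receives the code before and after the cursor and generates the gap between them, which is what an editor plugin does. The sketch below builds such a request from a source string and a cursor offset, without sending it. The `prompt`/`suffix` field names follow the OpenAI-style completions format. The beta endpoint path is an assumption, not a detail from this announcement, so confirm both in the official API documentation. `build_fim_request` is a hypothetical helper.

```python
import json

# Assumed FIM endpoint; verify against the official API docs.
FIM_URL = "https://api.deepseek.com/beta/completions"


def build_fim_request(source: str, cursor: int, model: str = "deepseek-coder", max_tokens: int = 64) -> str:
    """Split `source` at `cursor` into prefix/suffix and return a JSON request body (hypothetical helper)."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor outside source text")
    body = {
        "model": model,
        "prompt": source[:cursor],  # code before the cursor
        "suffix": source[cursor:],  # code after the cursor
        "max_tokens": max_tokens,
    }
    return json.dumps(body)


if __name__ == "__main__":
    code = "def add(a, b):\n    \n\nprint(add(1, 2))\n"
    cursor = code.index("    \n") + 4  # position inside the empty function body
    print(build_fim_request(code, cursor))
```

Splitting at a single offset guarantees that `prompt + suffix` reproduces the original file exactly. This means the model only has to fill the gap.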

DeepSeek-V2.5 has also been optimized for common coding scenarios to improve real-world performance. In the internal subjective evaluation DS-Arena-Code, its win rate against competitors (with GPT-4o as the judge) has increased significantly.

Open-Sourcing the Model

As always, in keeping with our long-term commitment to open source, DeepSeek-V2.5 is now open-sourced on Hugging Face: